If you’ve used docker, you’ve used container registries. Registries are a de facto part of the workflow, streamlining development, deployment, and operations. When a container host (Kubernetes, ACI/Fargate, Machine Learning, IoT, …) is asked to run an image, it must pull the image from a registry. Because containers can be scaled out and failed over at any time, hosts pull images not just once, but whenever a new instance starts on a node that doesn’t already have the image. Since registries are not just a development resource but a production operational dependency, all major cloud vendors have implemented docker/distribution with their cloud-specific storage and security semantics. Given the volume of development and production activity, these registries are hardened, secured, highly scalable and highly available.

Once you get past single-container deployments, you quickly realize you need additional artifacts to define deployments. Those artifacts include Kubernetes deployment files, Helm charts, docker-compose files, CNAB, Terraform, ARM and CloudFormation templates, and other evolving formats. If containers are becoming the common unit of deployment for software, why not use a registry to store, secure and maintain these new artifacts?

Registries as Cloud File Systems

Today, we generally think of registries as serving one file type: docker images. While developers have found creative ways to leverage the underlying manifest and layered blob delivery system, what we’re talking about here is abstracting at a higher level. Because registries were designed to support small to very large “files”, with security and a layered, cross-referenceable file system that works across the internet and within a datacenter, registries can support just about any artifact type. When we expand from docker images, Helm charts, and CNAB to any artifact type, we get an interesting explosion of possibilities and capabilities.

The benefit of registries over blob storage is the ability to reference artifacts consistently, including artifacts that may contain references to other shared artifacts. Versioning is built into the :tag scheme. And, if we get this right, it will work consistently across cloud vendors.
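To make the `:tag` idea concrete, here is a minimal Python sketch that splits a reference such as `demo42.azurecr.io/hello-world:1.0` into registry, repository, tag, and digest. It assumes the common Docker convention that the first path component is a registry host only if it contains a `.` or `:` or is `localhost`; it is a simplification, not the full reference grammar from docker/distribution, and `Reference` and `parse_reference` are hypothetical names.

```python
from typing import NamedTuple, Optional


class Reference(NamedTuple):
    registry: Optional[str]  # None when the reference omits a registry host
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_reference(ref: str) -> Reference:
    """Split 'registry/repo:tag' or 'registry/repo@digest' (simplified grammar)."""
    # Split off a digest first, since digests such as 'sha256:...' contain ':' too.
    name, _, digest = ref.partition("@")

    # A tag is a ':' that appears after the last '/', so a registry port
    # like 'localhost:5000/...' is not mistaken for a tag.
    tag = None
    colon, slash = name.rfind(":"), name.rfind("/")
    if colon > slash:
        name, tag = name[:colon], name[colon + 1:]

    # Treat the first component as a registry host only if it looks like one.
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        return Reference(first, rest, tag, digest or None)
    return Reference(None, name, tag, digest or None)


print(parse_reference("demo42.azurecr.io/hello-world:1.0"))
# Reference(registry='demo42.azurecr.io', repository='hello-world', tag='1.0', digest=None)
```

The docker CLI also fills in defaults (for example, `latest` when no tag is given); this sketch deliberately leaves missing fields as `None`.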

We can learn from file systems, which differentiate file types by their extension and represent each type with an icon. Because the specific artifact type is known, tools know which capabilities apply to it: how to deploy it, how to scan it, and how to compare different versions.
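In an OCI registry, the role a file extension plays on a file system is played by media types: a manifest’s `config.mediaType` identifies what kind of artifact it describes. The sketch below, assuming a hand-maintained lookup table, shows how a tool could choose capabilities from that type. The Docker and OCI image config media types are real, and the Helm entry is the config media type Helm 3 adopted for charts stored in OCI registries; the capability lists, `ARTIFACT_TYPES`, and `describe_artifact` are hypothetical and illustrative only.

```python
# Hypothetical lookup table: config media type -> (artifact kind, illustrative capabilities).
ARTIFACT_TYPES = {
    "application/vnd.docker.container.image.v1+json": ("docker image", ["run", "scan"]),
    "application/vnd.oci.image.config.v1+json": ("OCI image", ["run", "scan"]),
    "application/vnd.cncf.helm.config.v1+json": ("helm chart", ["deploy", "diff"]),
}


def describe_artifact(manifest: dict) -> tuple:
    """Classify a manifest by its config media type, much like a file extension."""
    media_type = manifest.get("config", {}).get("mediaType", "")
    # Unknown types can still be stored; tools just don't know what to do with them.
    return ARTIFACT_TYPES.get(media_type, ("unknown", []))


# A manifest trimmed down to the field that matters here (real ones also carry digest and size).
chart_manifest = {
    "schemaVersion": 2,
    "config": {"mediaType": "application/vnd.cncf.helm.config.v1+json"},
    "layers": [],
}
print(describe_artifact(chart_manifest))  # ('helm chart', ['deploy', 'diff'])
```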

Built and Acquired Artifacts

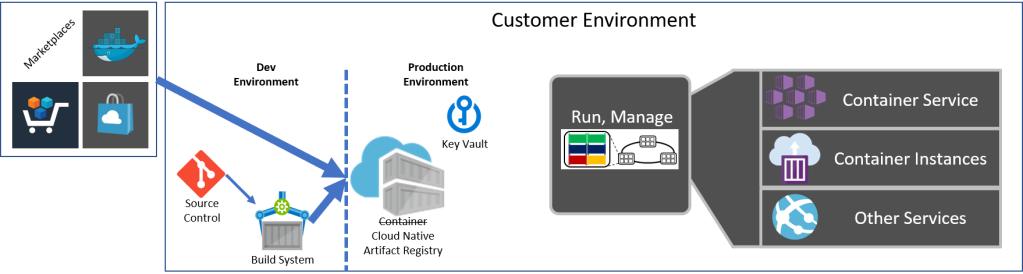

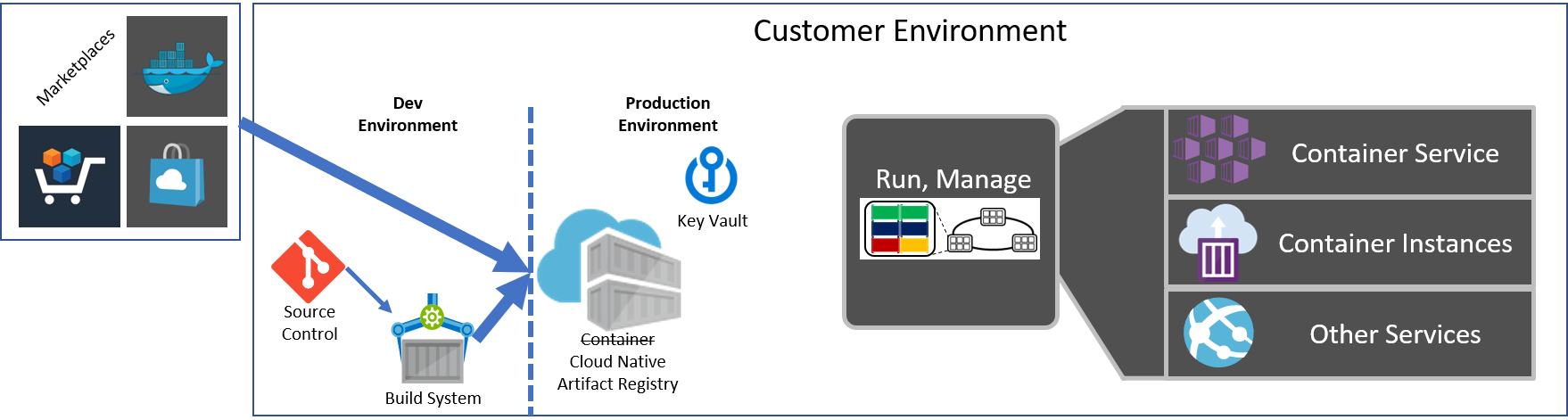

Will you build every image you deploy? I’m not talking about individual services or technology stacks. What happens when we start deploying ISV software packages acquired from marketplaces? These may be acquired by business people who have no idea what a build system is, nor should they need to.

In the above example, artifacts (including images, Helm charts, CNAB, ARM and CloudFormation templates…) are either built by an engineering team within the company or acquired externally. As a best practice, a customer should maintain a registry as part of their production environment and never depend on an external resource. See: Choosing a docker registry for more details.

For companies that build software, source control and a build system are key. However, while these resources are important, they’re not typically part of the production environment, nor do they have geo-redundant failover. And companies that outsource their development may not have source control or build systems at all.

The main point: registries are part of the production environment, and they are secured, reliable, replicated, and so on. Container hosts are configured with registry access to enable deployments. While development environments are extremely important for teams that have them, they’re not typically in the production reliability path.

Common Tooling Across All Registries

One of the many powerful features of the docker CLI is its ability to work across any docker/distribution based registry. Developers can use docker run across Azure, AWS, Google, on-prem servers and local development machines. As new artifacts surface, such as Helm and CNAB, wouldn’t it be nice if the same could be said for their CLIs?

Azure Container Registry (ACR) added Helm Chart support a few months back. Working with Josh Dolitsky (Chart Museum & Codefresh), we worked through the details of enabling the best experience possible. Rather than run an instance of Chart Museum, we felt it was important to leverage the infrastructure of ACR, including authentication and geo-replication. What we found were challenges in serving charts at scale, and how difficult and unique it was to add a new artifact type to a registry. We worked with the Helm maintainers, submitting the Helm Repos and Container Registry Convergence Proposal, which suggests referencing charts the same way we reference docker images:

```shell
helm upgrade hello-world demo42.azurecr.io/hello-world:1.0
```

To achieve this, either every registry must implement Helm-specific handlers, or registries must support additional artifact types generically, so that Helm can simply reference a chart in a registry as a specific artifact type.
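With the generic approach, resolving a chart reference uses the same OCI Distribution API call as resolving an image: `GET /v2/<name>/manifests/<reference>`, with an `Accept` header listing the manifest media types the client understands. The sketch below only builds that request rather than sending it; `manifest_request` is a hypothetical helper, and the bearer-token authentication real registries require is intentionally omitted.

```python
from typing import Dict, List, Tuple

# Manifest media types a generic artifact client might accept (both are real types).
MANIFEST_TYPES: List[str] = [
    "application/vnd.oci.image.manifest.v1+json",
    "application/vnd.docker.distribution.manifest.v2+json",
]


def manifest_request(registry: str, repository: str, reference: str) -> Tuple[str, Dict[str, str]]:
    """Build the URL and headers for fetching a manifest by tag or digest.

    Authentication (token exchange) is left out of this sketch.
    """
    url = f"https://{registry}/v2/{repository}/manifests/{reference}"
    headers = {"Accept": ", ".join(MANIFEST_TYPES)}
    return url, headers


url, headers = manifest_request("demo42.azurecr.io", "hello-world", "1.0")
print(url)  # https://demo42.azurecr.io/v2/hello-world/manifests/1.0
```

The registry doesn’t need to know it is serving a chart; the client inspects the returned manifest to learn which artifact type it fetched.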

Just as we completed ACR Helm Repo support, CNAB was being released. Do we need to go through this cycle again just to support CNAB? What about all the other new artifact types?

OCI Distribution and Artifact Types

As it turns out, the OCI Distribution spec accounted for different artifact types. Stephen Day and other OCI maintainers had thought long and hard about keeping the docker/OCI registry APIs from being artifact-specific. We came across Jimmy Zelinskie and other members of Quay.io, who implemented App Registry and dealt with many of these challenges. It was time to tap into this ability, and a group of folks has been collaborating over the last several weeks to enable registries as cloud-native artifact stores.
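The design generalizes because a manifest is just a small JSON document pointing at content-addressed blobs: a config descriptor plus a list of layer descriptors, each carrying a `mediaType`, a `sha256` digest, and a `size`. The sketch below builds such a manifest for arbitrary files. The `org.opencontainers.image.title` annotation is a real OCI annotation key (ORAS uses it to record file names); the `application/vnd.example...` media types are hypothetical placeholders, and `descriptor` and `build_manifest` are illustrative names.

```python
import hashlib
import json
from typing import Dict


def descriptor(media_type: str, data: bytes) -> dict:
    """An OCI content descriptor: blobs are addressed by their sha256 digest."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(data).hexdigest(),
        "size": len(data),
    }


def build_manifest(config_media_type: str, layer_media_type: str,
                   files: Dict[str, bytes]) -> dict:
    """Describe arbitrary files as an OCI image manifest, one layer per file."""
    config = b"{}"  # an empty JSON config; the config media type carries the meaning
    layers = []
    for name, content in files.items():
        layer = descriptor(layer_media_type, content)
        # Record the original file name so a client can restore it on pull.
        layer["annotations"] = {"org.opencontainers.image.title": name}
        layers.append(layer)
    return {
        "schemaVersion": 2,
        "mediaType": "application/vnd.oci.image.manifest.v1+json",
        "config": descriptor(config_media_type, config),
        "layers": layers,
    }


manifest = build_manifest(
    "application/vnd.example.artifact.config.v1+json",  # hypothetical type
    "application/vnd.example.artifact.layer.v1+tar",    # hypothetical type
    {"hello.txt": b"Hello, artifacts!\n"},
)
print(json.dumps(manifest, indent=2))
```

Nothing in this structure is image-specific, which is why a registry can store it without any new server-side handlers.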

Library for OCI Registry Artifacts

While most of us checked out over the holidays for some much-needed rest, a few people tapped into their creative process and wanted to test the Helm Repos and Container Registry Convergence Proposal.

Josh Dolitsky was eager to jump in. Rather than start from scratch, Shiwei Zhang took the server-side code we used for ACR Helm Repos and refactored it into a client library, taking advantage of the OCI Distribution design. After some quick iterations, they made a lot of progress, and Josh was able to demonstrate helm pull. At the same time, the folks at Docker were iterating on Docker Application, using CNAB. While we still need to converge elements of these two proposals, we agreed to converge on a common library that enables various artifact types to be stored in a registry. ORAS (OCI Registry As Storage) is now available.
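Under the hood, a client library like ORAS pushes an artifact the same way docker pushes an image: upload each blob, then upload the manifest that references them, so the registry never holds a manifest pointing at missing content. The sketch below only lists the OCI Distribution API calls for a monolithic blob upload (`POST` to open an upload session, then `PUT` to the returned location with `?digest=`). `push_plan` is a hypothetical helper, not ORAS’s API, and `<location>` stands in for the `Location` header the registry returns.

```python
from typing import List, Tuple


def push_plan(repository: str, tag: str, blob_digests: List[str]) -> List[Tuple[str, str]]:
    """List the registry API calls needed to push blobs and then a manifest."""
    calls = []
    for digest in blob_digests:
        # Existence check: if the registry answers 200, a real client skips the upload.
        calls.append(("HEAD", f"/v2/{repository}/blobs/{digest}"))
        # Open an upload session; the registry replies with a Location header.
        calls.append(("POST", f"/v2/{repository}/blobs/uploads/"))
        # Complete a monolithic upload by PUTting the bytes along with their digest.
        calls.append(("PUT", f"<location>?digest={digest}"))
    # The manifest goes last, once everything it references exists.
    calls.append(("PUT", f"/v2/{repository}/manifests/{tag}"))
    return calls


for method, path in push_plan("hello-artifact", "v1", ["sha256:aaa", "sha256:bbb"]):
    print(method, path)
```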

Cloud Native Artifact Stores are a Primitive

Just as each cloud offers VMs, storage, and authentication, we believe Cloud Native Artifact Stores are yet another commodity that should be ubiquitous across clouds. The goal isn’t to compete at this artifact layer, but to enable ISVs, partners, and developers to build atop the artifact store. By enabling CLIs to work consistently across clouds, we empower teams to build better services and experiences that all users can benefit from. Just as ISVs have built experiences over the Kubernetes API and Docker image registries, we hope to see products use this common artifact infrastructure for CI/CD, security scanning, and whatever other ideas the community comes up with.

Just the Beginning

We’re still early in this process, and we’re sorting through many details to ensure an easy on-ramp for supporting new artifact types in registries. We hope to ship registry support with Helm 3 and CNAB later this year, and to see additional artifacts enabled. We hope registry artifact support will expand beyond Azure, Quay and Docker Hub, and that enabling artifacts as a cloud primitive will let vendors and the community build great tools. We’re excited to see where this is going, and what creative minds might do if they can focus on building new artifacts rather than building a distribution system for each of them.

For all the people not mentioned who contributed to this moment, thank you! While this started as a Docker Inc project, it’s the involvement of people across the community that makes such powerful, enabling projects possible.

For more information on Helm and CNAB repositories, see Distributing with Distribution.

Thanks,

Steve